Hallucination in Agentic AI

Agentic AI systems are designed to reason, plan, and act autonomously.

But without strong controls, they introduce a critical risk: hallucination.

In agentic systems, hallucination is not just a wrong answer; it can lead to incorrect actions, policy violations, and real-world damage.

At DeepFinery, eliminating hallucination is not an afterthought.

It is a core architectural principle.

What is hallucination in agentic AI?

Hallucination occurs when an AI system:

- Generates information that is not grounded in facts or rules

- Makes decisions that violate known policies or constraints

- Invents reasoning steps that cannot be verified

- Acts confidently despite missing or incomplete information

In agentic AI, hallucination is more dangerous because:

- Decisions may impact money, compliance, customers, or operations

- The system acts, not just responds

- Errors can cascade across steps

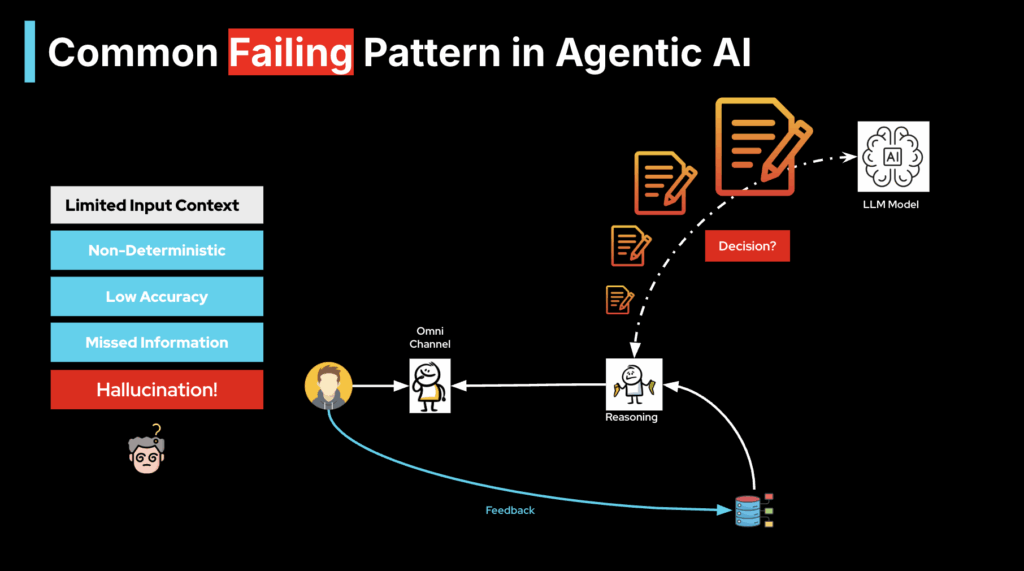

Why traditional AI approaches fail

Most agentic AI systems today rely on:

- Large language models

- Probabilistic reasoning

- Prompt-based control

- Post-hoc validation

These approaches struggle because:

- Neural models approximate behavior; they do not enforce logic

- Prompts are not guarantees

- Output validation happens after reasoning

- There is no executable definition of “allowed behavior”

As a result, hallucination becomes unavoidable at scale.

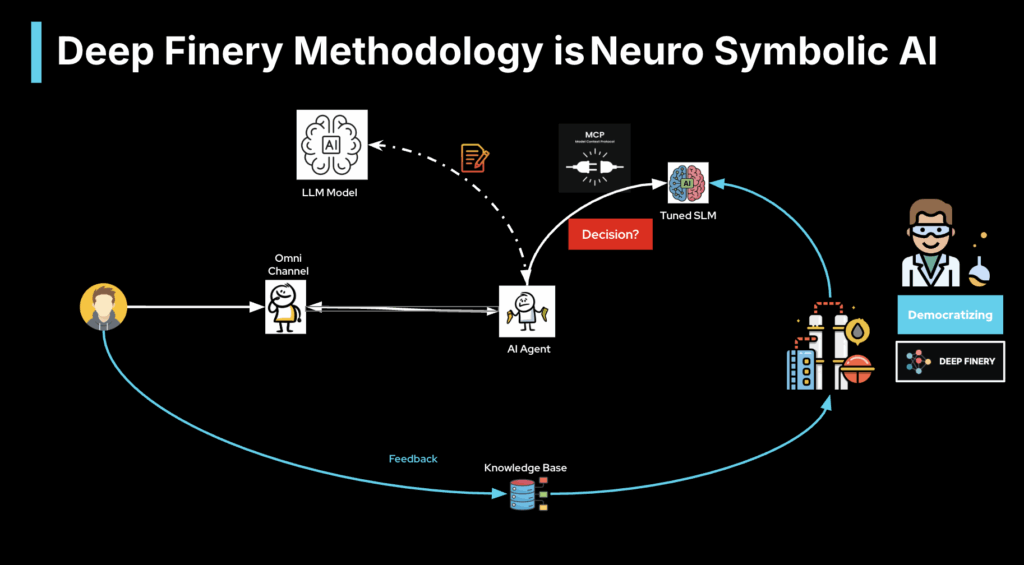

DeepFinery’s approach: hallucination prevention by design

DeepFinery addresses hallucination at its source rather than at the output.

We combine Neuro-Symbolic AI with deterministic execution to ensure that agent behavior is bounded, verifiable, and correct.

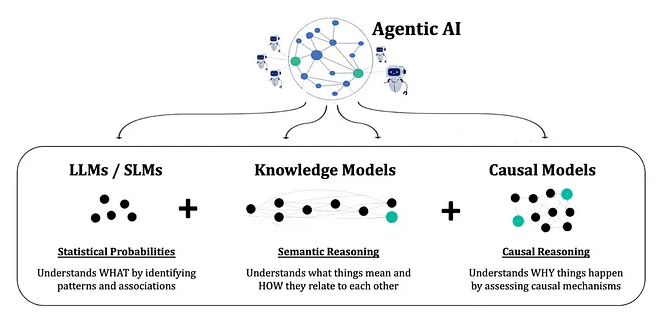

Neuro-Symbolic AI: the foundation

Neuro-Symbolic AI combines:

Symbolic intelligence

- Business rules

- Policies

- Constraints

- Decision logic

- Domain semantics

Neural intelligence

- Pattern recognition

- Ranking and scoring

- Natural language understanding

- Statistical learning

At DeepFinery:

- Rules are executable

- Logic is explicit

- Neural models operate within defined boundaries

This removes the conditions under which hallucination occurs.

How DeepFinery eliminates hallucination in agentic AI

1. Deterministic decision boundaries

Agent actions are constrained by symbolic rules and policies.

If an action is not allowed by logic:

- It cannot be taken

- It cannot be generated

- It cannot be hallucinated
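The boundary idea can be illustrated with a minimal Python sketch, assuming rules are plain predicates over a request context and each action lists the rules it requires. The names `Context`, `ACTION_RULES`, `permitted_actions`, and `take_action`, the payment rules, and the 10,000 limit are all hypothetical, not DeepFinery's API. The agent's choices are restricted to the permitted set, and anything outside it is refused before execution.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Set


@dataclass(frozen=True)
class Context:
    """Hypothetical facts about one payment request."""
    amount: float
    customer_verified: bool
    sanctions_hit: bool


# A rule is an executable predicate over the context.
Rule = Callable[[Context], bool]

# Hypothetical policy: an action is permitted only if every one of its rules passes.
ACTION_RULES: Dict[str, List[Rule]] = {
    "approve_payment": [
        lambda c: c.customer_verified,
        lambda c: not c.sanctions_hit,
        lambda c: c.amount <= 10_000,
    ],
    "block_payment": [lambda c: c.sanctions_hit],
    "escalate_to_analyst": [lambda c: True],  # always available as a safe option
}


def permitted_actions(ctx: Context) -> Set[str]:
    """Compute the action space the agent is allowed to choose from."""
    return {name for name, rules in ACTION_RULES.items() if all(rule(ctx) for rule in rules)}


def take_action(proposed: str, ctx: Context) -> str:
    """Execute only actions inside the boundary; refuse everything else."""
    if proposed not in permitted_actions(ctx):
        raise PermissionError(f"{proposed!r} is outside the decision boundary")
    return proposed


ctx = Context(amount=50_000, customer_verified=True, sanctions_hit=False)
print(sorted(permitted_actions(ctx)))  # ['escalate_to_analyst']
```

A proposed action that does not appear in `ACTION_RULES` at all, such as an invented tool name, is refused the same way, because it can never be in the permitted set.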

2. Verified reasoning paths

Every decision step is:

- Traceable

- Explainable

- Auditable

Agents cannot invent reasoning chains.

They must follow explicit, verifiable logic.
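One way to make reasoning paths auditable is to have the agent evaluate an ordered list of named rules and record each step it takes. The sketch below is a hypothetical illustration: the claim fields, the rule ids, and `decide_claim` are invented for the example. Because the outcome depends only on the explicit rule list and the inputs, re-running it on the same claim reproduces the same trace.

```python
from dataclasses import dataclass
from typing import Any, Callable, Dict, List, Tuple


@dataclass(frozen=True)
class TraceStep:
    rule_id: str            # which explicit rule was evaluated
    inputs: Dict[str, Any]  # the facts the rule read
    passed: bool            # the rule's outcome


@dataclass
class Decision:
    outcome: str
    trace: List[TraceStep]


# Hypothetical ordered rule chain: (rule id, fields read, predicate).
RULES: List[Tuple[str, Tuple[str, ...], Callable[[Dict[str, Any]], bool]]] = [
    ("R1_policy_active", ("policy_active",), lambda c: c["policy_active"]),
    ("R2_within_coverage", ("amount", "limit"), lambda c: c["amount"] <= c["limit"]),
]


def decide_claim(claim: Dict[str, Any]) -> Decision:
    """Walk the rule chain in order, recording every step that was evaluated."""
    trace: List[TraceStep] = []
    for rule_id, fields, predicate in RULES:
        passed = bool(predicate(claim))
        trace.append(TraceStep(rule_id, {f: claim[f] for f in fields}, passed))
        if not passed:
            return Decision(outcome=f"reject:{rule_id}", trace=trace)
    return Decision(outcome="approve", trace=trace)


d = decide_claim({"policy_active": True, "amount": 1_200, "limit": 1_000})
print(d.outcome)  # reject:R2_within_coverage
for step in d.trace:
    print(step)
```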

3. Separation of reasoning and execution

Neural models may:

- Interpret inputs

- Rank options

- Extract signals

But final decisions are enforced symbolically.

This ensures:

- Consistency

- Compliance

- Predictable behavior
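The split between neural ranking and symbolic enforcement can be sketched as follows, assuming the neural model only returns a score per candidate option. `neural_scores` is a placeholder for any learned ranker (here it simply looks up precomputed scores), and the arrears rule and option names are hypothetical. The ranker influences the order in which options are considered, but only an option that passes `symbolic_allows` can be returned.

```python
from typing import Dict, List, Sequence


def neural_scores(options: Sequence[str], signals: Dict[str, float]) -> Dict[str, float]:
    """Stand-in for a learned ranker: here it simply looks up precomputed scores."""
    return {opt: signals.get(opt, 0.0) for opt in options}


def symbolic_allows(option: str, facts: Dict[str, bool]) -> bool:
    """Hypothetical hard policy: never raise the credit limit of an account in arrears."""
    if option == "increase_limit" and facts["in_arrears"]:
        return False
    return True


def decide(
    options: List[str],
    signals: Dict[str, float],
    facts: Dict[str, bool],
    default: str = "refer_to_human",
) -> str:
    scores = neural_scores(options, signals)
    # Neural side proposes an ordering; the symbolic check decides what may be executed.
    for option in sorted(options, key=lambda o: scores[o], reverse=True):
        if symbolic_allows(option, facts):
            return option
    return default  # deterministic outcome when nothing passes the rules


options = ["increase_limit", "offer_payment_plan", "no_change"]
signals = {"increase_limit": 0.9, "offer_payment_plan": 0.6, "no_change": 0.2}
print(decide(options, signals, {"in_arrears": True}))   # offer_payment_plan
print(decide(options, signals, {"in_arrears": False}))  # increase_limit
```

Swapping the ranker for a different model changes which allowed option is preferred, but cannot make a disallowed option reachable.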

4. Continuous constraint checking

DeepFinery agents are continuously checked against:

- Policy constraints

- Regulatory rules

- Business logic

- Safety boundaries

If a neural suggestion violates constraints:

- It is rejected

- The agent falls back to deterministic logic
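A minimal sketch of this gate, assuming each constraint is a named predicate over the neural suggestion and the current facts. The refund scenario, the constraint names, `check_step`, `run_agent`, and `deterministic_fallback` are hypothetical. The check runs on every step the agent proposes; any violation replaces the suggestion with the rule-based default and records which constraints failed.

```python
from dataclasses import dataclass
from typing import Any, Callable, Dict, List, Tuple

Suggestion = Dict[str, Any]
Facts = Dict[str, Any]


@dataclass(frozen=True)
class Constraint:
    name: str
    category: str  # e.g. "policy", "regulatory", "business", "safety"
    holds: Callable[[Suggestion, Facts], bool]


# Hypothetical constraints for a refund agent.
CONSTRAINTS: List[Constraint] = [
    Constraint("refund_within_order_total", "business", lambda s, f: s["refund"] <= f["order_total"]),
    Constraint("kyc_before_payout", "regulatory", lambda s, f: f["kyc_complete"] or s["refund"] == 0),
    Constraint("non_negative_refund", "safety", lambda s, f: s["refund"] >= 0),
]


def deterministic_fallback() -> Suggestion:
    """Hypothetical rule-based default: pay nothing and escalate."""
    return {"refund": 0, "action": "escalate"}


def check_step(suggestion: Suggestion, facts: Facts) -> Tuple[Suggestion, List[str]]:
    """Reject a neural suggestion that violates any constraint and fall back."""
    violated = [c.name for c in CONSTRAINTS if not c.holds(suggestion, facts)]
    if violated:
        return deterministic_fallback(), violated
    return suggestion, []


def run_agent(suggestions: List[Suggestion], facts: Facts) -> List[Tuple[Suggestion, List[str]]]:
    """Apply the check at every step of the agent loop, not just to the final output."""
    return [check_step(s, facts) for s in suggestions]


facts = {"order_total": 100, "kyc_complete": False}
steps = run_agent([{"refund": 0, "action": "close"}, {"refund": 150, "action": "refund"}], facts)
for executed, violated in steps:
    print(executed, violated)
```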

5. Synthetic data grounded in logic

Training data is generated from rules and semantics, not guesses.

This means:

- Models learn correct behavior

- Edge cases are explicitly covered

- There is no drift away from policy intent
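As an illustration of generating training data from a rule instead of collecting guessed labels, here is a minimal sketch assuming the policy can be written as an executable function. The threshold, the field names, `policy`, and `synthesize` are hypothetical. Every label is computed by the rule itself, and values on and immediately around the decision boundary are always included, so edge cases are covered explicitly rather than left to chance.

```python
import itertools
import random
from typing import Dict, List, Union

THRESHOLD = 10_000  # hypothetical policy limit

Row = Dict[str, Union[int, bool, str]]


def policy(amount: int, verified: bool) -> str:
    """The executable rule that defines the correct label."""
    return "approve" if verified and amount <= THRESHOLD else "review"


def synthesize(n_random: int = 20, seed: int = 0) -> List[Row]:
    rng = random.Random(seed)  # seeded so the dataset is reproducible
    # Edge cases: values on and immediately around the boundary.
    edge_amounts = [0, THRESHOLD - 1, THRESHOLD, THRESHOLD + 1]
    random_amounts = [rng.randint(0, 3 * THRESHOLD) for _ in range(n_random)]
    return [
        {"amount": amount, "verified": verified, "label": policy(amount, verified)}
        for amount, verified in itertools.product(edge_amounts + random_amounts, (True, False))
    ]


rows = synthesize(n_random=5, seed=1)
print(len(rows))  # 18
```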

What this means for agentic AI

With DeepFinery:

- Agents do not hallucinate actions

- Decisions are reproducible

- Behavior is predictable under all conditions

- AI systems can be trusted in high-stakes environments

This enables agentic AI to move from experimentation to production reality.

Built for regulated and mission-critical domains

DeepFinery’s hallucination-resistant architecture is designed for environments where mistakes are unacceptable:

- Financial services

- Fraud and AML

- Risk and compliance

- Insurance and claims

- Government and policy enforcement

- Enterprise automation

From probabilistic agents to reliable systems

Hallucination is not a tuning problem.

It is an architectural problem.

DeepFinery solves it by combining:

- Deterministic logic

- Symbolic constraints

- Neural adaptability

- Verifiable execution

The result is agentic AI that acts with intelligence and certainty.

Hallucination is optional. Control is not.

DeepFinery delivers agentic AI you can trust.